adversarial environment

…other. When dealing with an intractable adversarial environment, consider the [[the iceberg model of systems|system structure]] (e.g. incentives, [[mental models]]) that gives rise…

Tended 4 years ago (7 times) Planted 4 years ago Mentioned 1 time

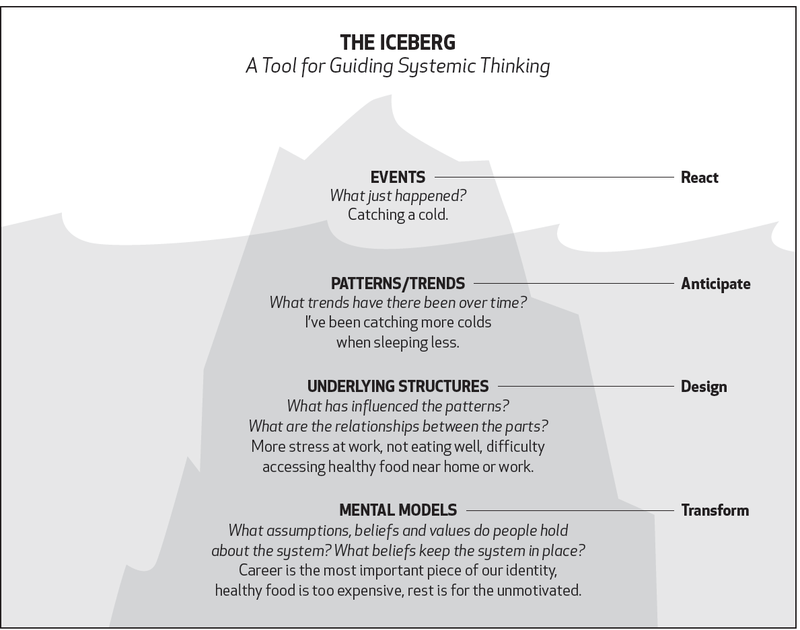

The iceberg model is a way of looking at the behavior of systems at increasing levels of abstraction in order to understand the underlying causes of that behavior. I’ve found it useful to apply this model to the sociotechnical systems I am a part of at work.

Events are empirical observations of things that happen, e.g. a service outage occurs, a deadline is missed, someone quits. Events can, of course, also be good things, like a near-miss outage that a safeguard prevented, or something finishing ahead of schedule. (As appreciative inquiry points out, we’re biased toward investigating systems only when they fail, but we can learn just as much from systems working as intended.)

Noticing Patterns is the first level of insight we can have about a system. Perhaps we’re having repeated service outages for similar reasons, or one team is particularly prone to missing deadlines. Or on the bright side, perhaps one team is shipping faster with fewer bugs. I used to think that identifying a pattern was the end of the story, that knowledge of a pattern and its ill effects was enough to make the participants in the system change their behavior. But that rarely works, leading to frustration and resentment—“Why do people keep making suboptimal choices?!”, or as I often hear, “Why don’t people care about X?”—until you realize that patterns of behavior are the result of…

The Underlying structure of the system: its goals, rules, incentives, communication channels, habits, and physical barriers. For instance, you might point out that technical debt is accumulating at an unsustainable rate and even get others to agree, but if your team is incentivized to work only on new features, it will be hard to get anyone to change their behavior for long. In order to make sustainable change within a system, you have to change the structure of the system.

Mental models are the assumptions, beliefs, and values which mutually reinforce the structure of the system. In order to alter the structure of a system (or get rid of it entirely), you have to change the ideas that give rise to it. As Robert Pirsig writes in Zen and the Art of Motorcycle Maintenance:

> If a factory is torn down but the rationality which produced it is left standing, then that rationality will simply produce another factory. If a revolution destroys a government, but the systematic patterns of thought that produced that government are left intact, then those patterns will reproduce themselves… There’s so much talk about the system. And so little understanding.

For instance, one system structure we used to have at work was a policy that all database changes needed to be approved by a subset of senior engineers. As you might imagine, this policy arose after a number of service outages resulting from unsafe (long-running and locking) database operations. So the belief that gave rise to it was something like “It’s dangerous to allow all engineers to make unsupervised database changes in production.” Soon after we introduced the policy, it became clear that these reviews weren’t happening promptly (there were so many that the review board was overwhelmed). But even after realizing that the reviews were costing us a lot of time, we resisted changing the system out of the belief that it was dangerous to stop. It wasn’t until we reduced the cost of failure, by introducing a time limit on database changes so that they could take the app down for at most a few seconds, that we were able to convince everyone to get rid of the policy.

This example also illustrates how system structure and mental models are interdependent. If you don’t trust all engineers to change the database schema, you might start requiring approval before they do, which sends a message to engineers that they can’t be trusted to change the database schema. In this way, even those negatively impacted by the system might come to adopt the mental models which sustain it.
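
To make the time limit from the database example concrete, here is a minimal sketch of the general idea: run a change, and abort it if it takes too long, so the worst case is bounded. The note doesn’t say which database or mechanism was actually used, so everything here is an assumption for illustration. It uses SQLite’s progress handler as a stand-in (a production database would more likely rely on a server-side statement or lock timeout), and `run_with_time_limit` is a hypothetical helper name.

```python
import sqlite3
import time


def run_with_time_limit(conn, sql, limit_seconds):
    """Run one statement; abort it if it runs longer than limit_seconds.

    Hypothetical helper for illustration only, not the policy's real mechanism.
    Returns True if the statement finished, False if it was aborted or failed.
    """
    deadline = time.monotonic() + limit_seconds

    def over_deadline():
        # A non-zero return value tells SQLite to abort the running statement.
        return 1 if time.monotonic() > deadline else 0

    # Ask SQLite to call the handler every 1000 virtual machine instructions.
    conn.set_progress_handler(over_deadline, 1000)
    try:
        conn.execute(sql)
        return True
    except sqlite3.OperationalError:
        # The statement was interrupted (or failed for another reason);
        # roll back any partial work so the database stays usable.
        conn.rollback()
        return False
    finally:
        # Remove the handler so later statements are not time-limited.
        conn.set_progress_handler(None, 1000)


conn = sqlite3.connect(":memory:")
print(run_with_time_limit(conn, "CREATE TABLE t (id INTEGER)", 1.0))
```

The point of the sketch is the structural one made above: once a bad change can only hurt for a bounded, short time, the belief that unsupervised changes are dangerous has much less to stand on.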
